Many people use cloud providers on a regular basis. Running a virtual machine, launching a Kubernetes cluster, or managing complex infrastructure components is only a click or a command away. As you can imagine, the internal mechanisms a cloud provider uses to offer these services to customers are considerably more complex.

In this article, we detail the internal architecture of SKS (Scalable Kubernetes Service), the Exoscale managed Kubernetes offering. It gives the reader a better understanding of what happens under the hood each time a customer creates a cluster.

A brief introduction to the historical infrastructure

From the beginning, Exoscale has based its internal infrastructure on Apache CloudStack, an open-source cloud computing platform used to manage a large network of virtual machines. CloudStack is thus an orchestrator of VMs. To develop its own internal platform, Exoscale built additional orchestrators to manage other types of resources such as pools of virtual machines, network load balancers, private networks, Kubernetes clusters, … These orchestrators run outside of CloudStack, as we will see below.

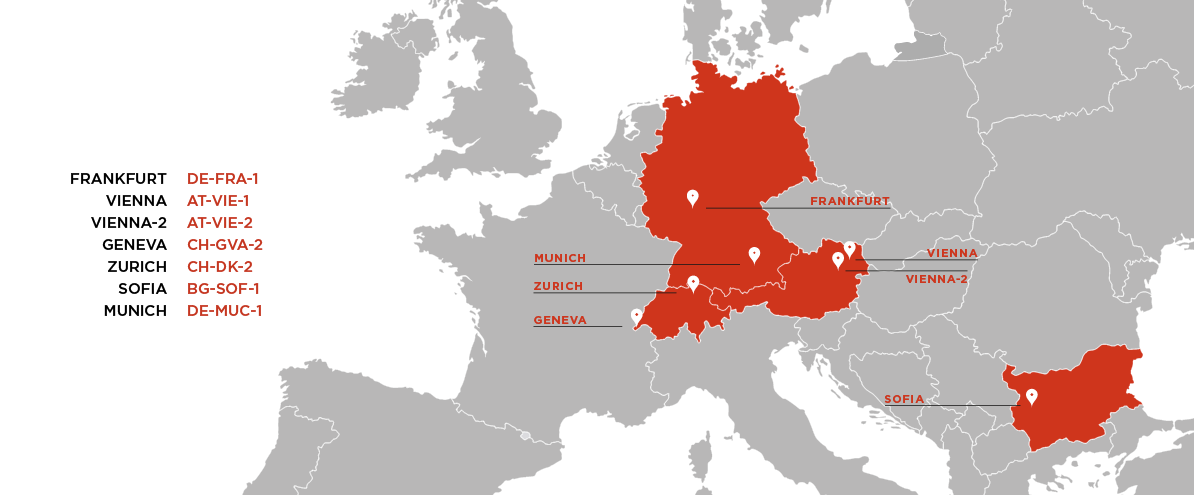

Exoscale Zones

At the time of writing, Exoscale has 7 zones, each zone representing a datacenter. Zones are fully isolated from each other, and each one has its own hardware to provision compute instances (that is, virtual machines), network, object storage, and block storage.

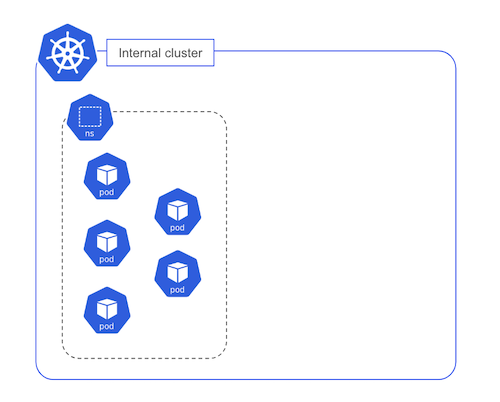

Internal Kubernetes cluster for each zone

In each zone, an internal Kubernetes cluster runs different kinds of workloads such as:

- the public-facing Exoscale API for the zone

- the internal API to manage the entire infrastructure

- internal orchestrators

- …

Each zone has its own cluster running internal workloads.

sks-orchestrator

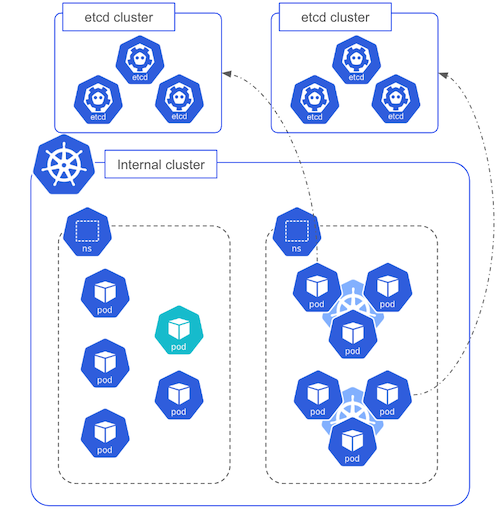

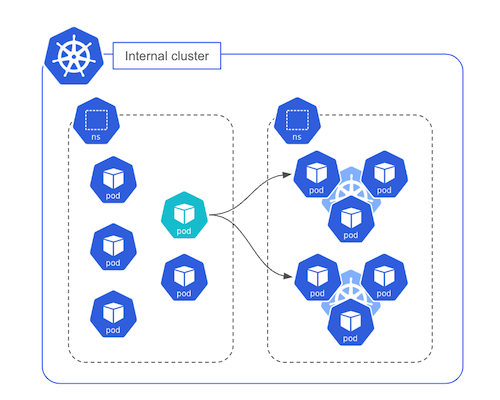

Among the workloads running on each zonal cluster, the sks-orchestrator is in charge of managing the users’ clusters. It runs as a Deployment with multiple replicas (only one is represented in the schema below).

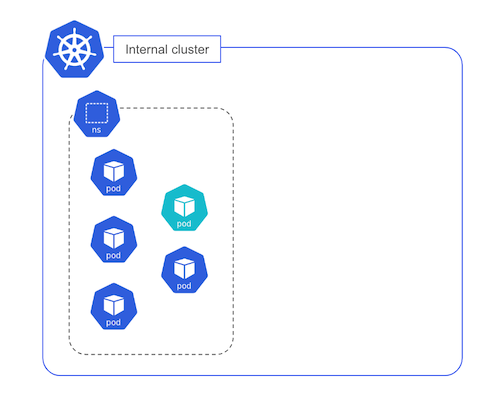

More precisely, the sks-orchestrator manages the control plane of each SKS cluster. For every cluster, it deploys the core control plane components as Pods in the zonal cluster:

- API Server

- Scheduler

- Controller Manager

The following schema shows 2 control planes, each one responsible for a single SKS cluster.

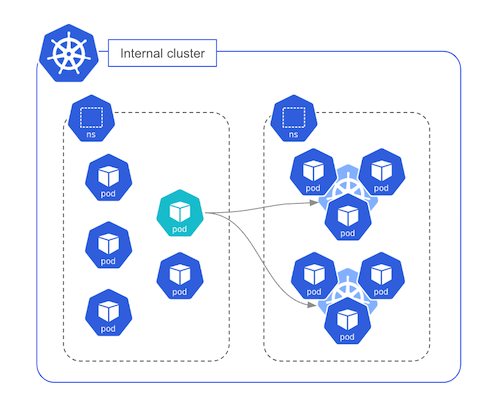

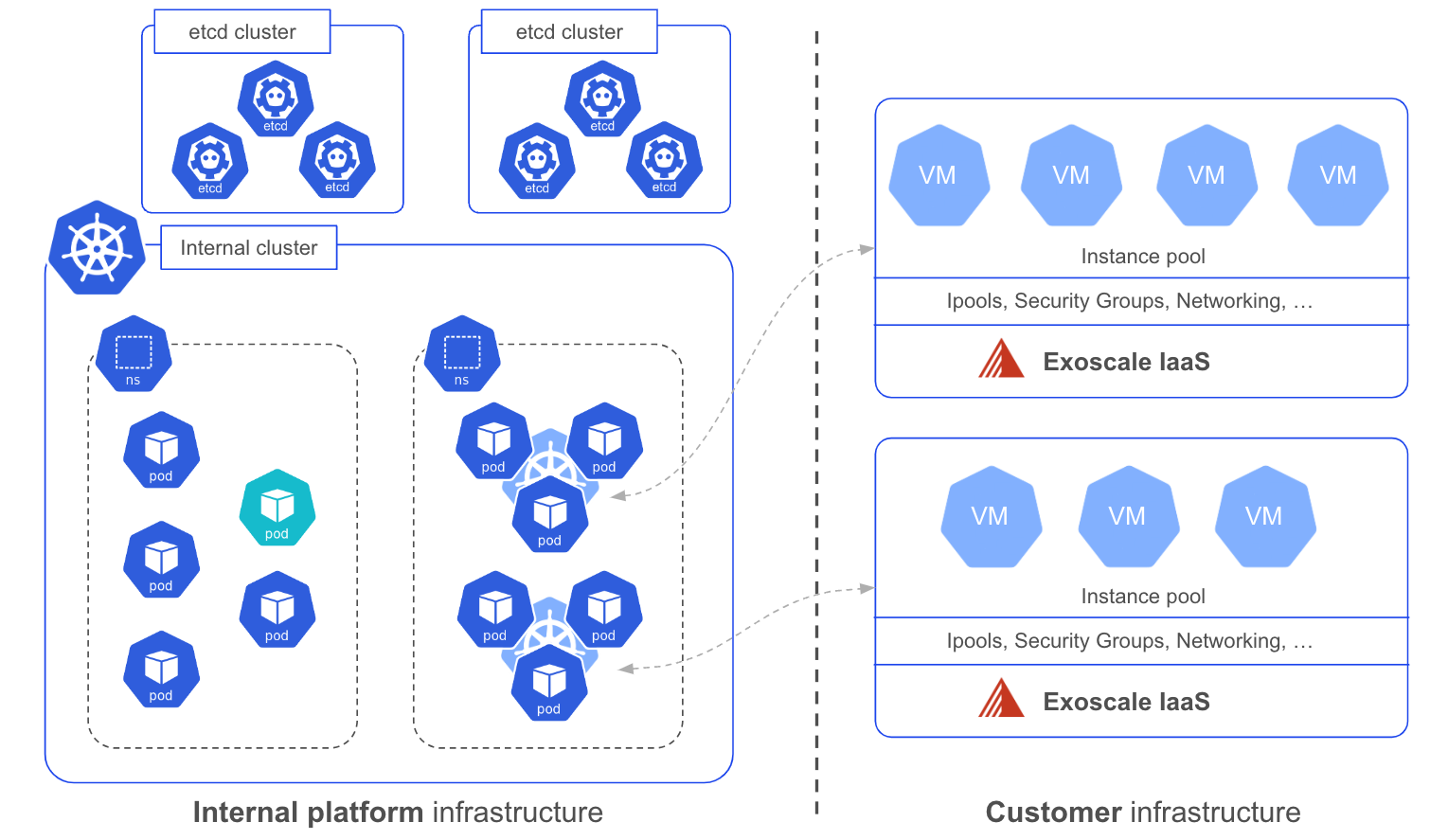

Zonal etcd clusters

In addition to the API Server, Scheduler, and Controller Manager, a cluster’s control plane requires etcd to store its current state. Currently, one etcd cluster is deployed in each zone and shared by the Kubernetes control planes of that zone. In the future, depending on the size and criticality of an SKS cluster, a dedicated etcd cluster could be deployed for it. To illustrate this possible evolution, the following schema shows one etcd cluster per control plane.

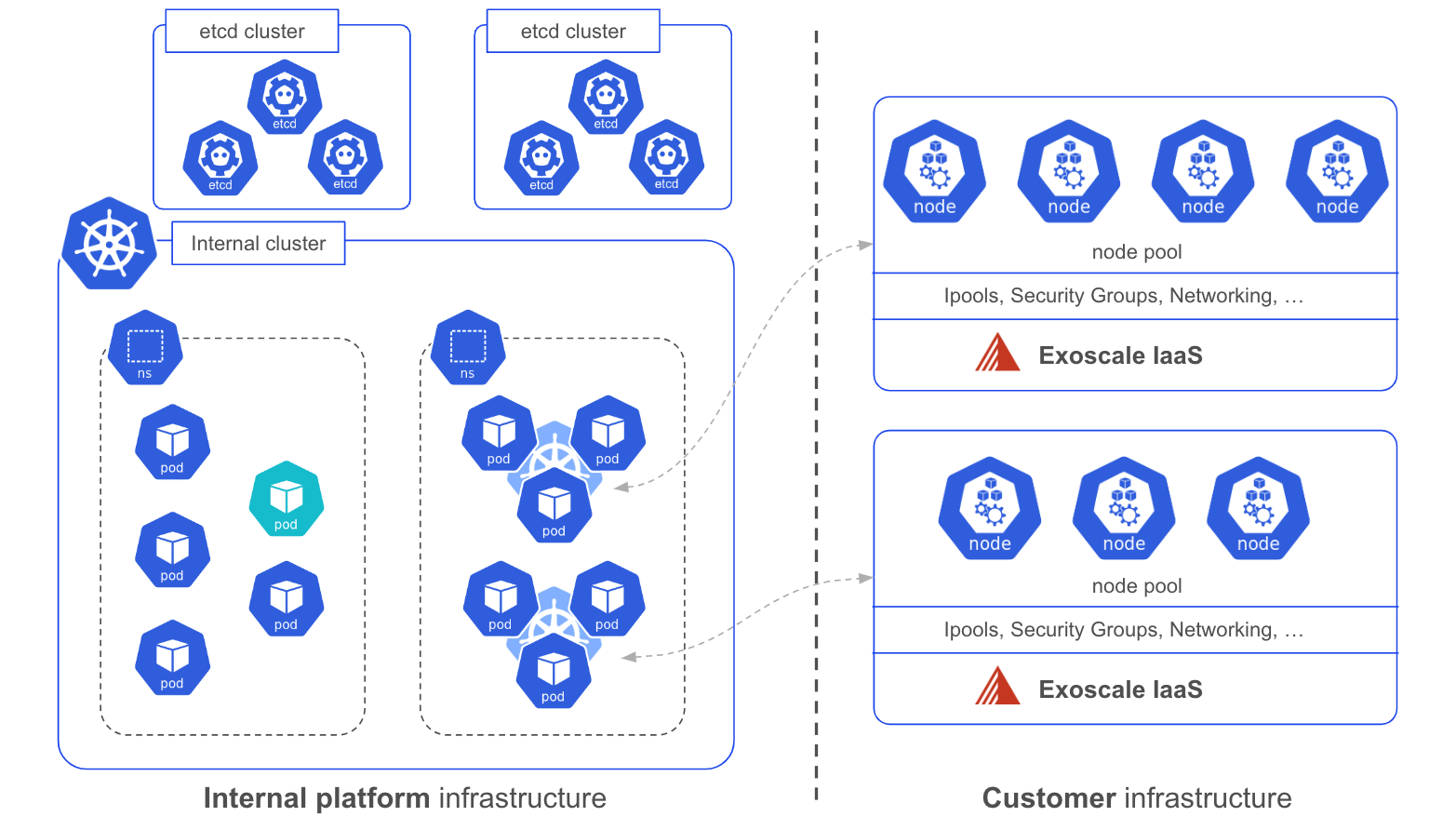

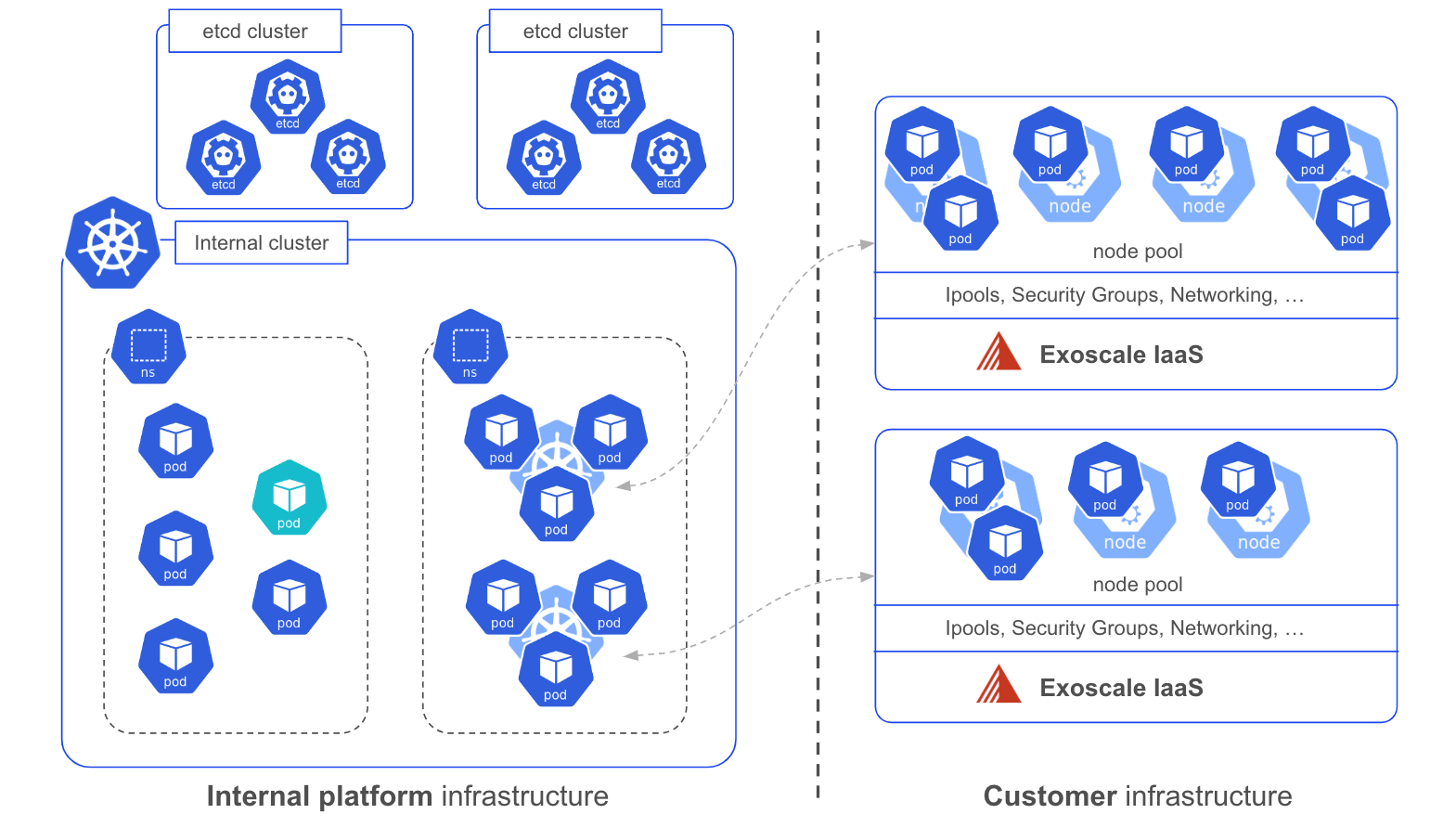

Provisioning worker nodes

Once a cluster’s control plane is provisioned, worker nodes can be added using the web portal, the exo CLI or IaC tools like Terraform or Pulumi. This process first creates an instance pool, which is a group of virtual machines with the same technical specifications, based on the user preferences:

- Number of instances in the pool

- Instance type (e.g., Ubuntu Linux, OpenBSD, …)

- Amount of RAM

- Number of vCPUs

- Disk size

The diagram below shows one instance pool for each control plane.

The instances within these instance pools are booted from a custom template in which components such as containerd and kubelet are pre-installed. cloud-init is currently used to configure the settings that are unknown when the template is built (e.g., the kubelet’s TLS bootstrap token, the API Server endpoint, initial labels and taints, …). This process turns the instance pool into a Kubernetes node pool: each instance becomes a worker node and joins the cluster using the TLS bootstrap feature, as described in the official Kubernetes documentation.
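To make the TLS bootstrap step more concrete, here is a minimal Python sketch of the kind of bootstrap kubeconfig a node needs before it can join a cluster. It is an illustration only, not Exoscale's actual cloud-init payload: the function name, the entry names (`bootstrap`, `kubelet-bootstrap`), and the example values are assumptions. The kubeconfig layout and the bootstrap token format (`[a-z0-9]{6}.[a-z0-9]{16}`) follow the upstream Kubernetes documentation.

```python
import json
import re

# Bootstrap token format documented by upstream Kubernetes: "<id>.<secret>".
TOKEN_PATTERN = re.compile(r"^[a-z0-9]{6}\.[a-z0-9]{16}$")


def bootstrap_kubeconfig(api_server_url: str, token: str, ca_data: str) -> dict:
    """Build a bootstrap kubeconfig as a plain dict (hypothetical helper).

    api_server_url: endpoint of the cluster's API Server.
    token: TLS bootstrap token, only known once the cluster exists.
    ca_data: base64-encoded CA certificate of the cluster.
    """
    if not TOKEN_PATTERN.match(token):
        raise ValueError("token must look like 'abcdef.0123456789abcdef'")
    return {
        "apiVersion": "v1",
        "kind": "Config",
        "clusters": [{
            "name": "bootstrap",
            "cluster": {
                "server": api_server_url,
                "certificate-authority-data": ca_data,
            },
        }],
        "users": [{"name": "kubelet-bootstrap", "user": {"token": token}}],
        "contexts": [{
            "name": "bootstrap",
            "context": {"cluster": "bootstrap", "user": "kubelet-bootstrap"},
        }],
        "current-context": "bootstrap",
    }


if __name__ == "__main__":
    # Placeholder values; a real token and CA come from the control plane.
    config = bootstrap_kubeconfig(
        "https://example.invalid:6443", "abcdef.0123456789abcdef", "Q0E="
    )
    # JSON is valid YAML, so this output can be written to a kubeconfig file.
    print(json.dumps(config, indent=2))
```

A file like this is what the kubelet reads through its `--bootstrap-kubeconfig` option. It uses the token to request a client certificate, then switches to that certificate for normal operation.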

The cluster is now ready to be used, and users can deploy their applications.

Lifecycle

The control plane of an SKS cluster can be upgraded automatically or manually. For automatic upgrades, the Automated Upgrade option must be enabled, either at creation time or after the cluster is created. A manual upgrade can be triggered from the Exoscale console or the exo CLI. The upgrade process is as follows.

When a new version of upstream Kubernetes is released, a new manifest template is generated for each of the control plane components (API Server, Scheduler, Controller Manager). First, the template is used to create the manifest for the component, and then a hash is calculated for this manifest. The sks-orchestrator is responsible for comparing this new hash with the hash of the manifest currently in use. If they differ, the new manifest is applied, thus upgrading the control plane component.
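The hash comparison described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the sks-orchestrator's code: `render_manifest`, `manifest_hash`, and `reconcile` are hypothetical names, the manifest is a reduced stand-in for a real Deployment, and SHA-256 is one possible choice of hash (the article does not say which one is used).

```python
import hashlib
import json


def render_manifest(component: str, cluster_id: str, version: str) -> str:
    """Render a simplified manifest for one control plane component.

    Keys are sorted so that identical inputs always give identical text,
    which keeps the hash stable between runs.
    """
    manifest = {
        "kind": "Deployment",
        "metadata": {"name": f"{cluster_id}-{component}"},
        "image": f"registry.k8s.io/{component}:{version}",
    }
    return json.dumps(manifest, sort_keys=True)


def manifest_hash(manifest: str) -> str:
    """Return the SHA-256 hex digest of a rendered manifest."""
    return hashlib.sha256(manifest.encode("utf-8")).hexdigest()


def reconcile(applied: dict, component: str, cluster_id: str, version: str) -> bool:
    """Apply the new manifest only if its hash differs from the one in use.

    `applied` maps a component name to the hash of its current manifest.
    Returns True when an upgrade was triggered, False otherwise.
    """
    new_hash = manifest_hash(render_manifest(component, cluster_id, version))
    if applied.get(component) == new_hash:
        return False  # nothing changed, leave the component alone
    # A real orchestrator would apply the manifest to the zonal cluster here.
    applied[component] = new_hash
    return True


if __name__ == "__main__":
    state = {}
    print(reconcile(state, "kube-apiserver", "cluster-a", "v1.30.0"))  # True
    print(reconcile(state, "kube-apiserver", "cluster-a", "v1.30.0"))  # False
    print(reconcile(state, "kube-apiserver", "cluster-a", "v1.31.0"))  # True
```

Comparing hashes rather than version strings means any change to the rendered manifest (a new flag, a different resource limit) triggers a rollout, while an unchanged manifest is left untouched.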

Technical considerations

The good

- Provisioning an SKS cluster is fast, usually around 2 minutes

- Users have no access to the control plane and cannot alter it

- The control plane is resilient because it is managed by Kubernetes under the hood

- The pro plan benefits from autoscaling, which allows multiple API Server Pods to run depending on resource usage

- Minor version upgrades are fully managed

The not so good

Because the API Server of each SKS cluster is exposed through a NodePort Service in the internal zonal cluster, the number of SKS clusters per zone is currently limited to the number of available NodePorts, which is 2768 by default since NodePorts are allocated from the 30000-32767 range (bounds included). This range is a parameter of the API Server (the --service-node-port-range flag), so it could be modified to increase the number of available NodePorts.
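The capacity follows directly from the width of the port range, counting both endpoints. The short Python sketch below computes it for the default range and for a wider one. The function name and the wider range are illustrative assumptions, and the figure is an upper bound that assumes one NodePort per SKS cluster and no other NodePort Services in the zonal cluster.

```python
def node_port_capacity(port_range: str) -> int:
    """Return how many ports a 'low-high' range contains, bounds included."""
    low, high = (int(part) for part in port_range.split("-"))
    if low > high:
        raise ValueError("range must be written as 'low-high'")
    return high - low + 1


if __name__ == "__main__":
    # Default NodePort range of the Kubernetes API Server.
    print(node_port_capacity("30000-32767"))  # 2768
    # Hypothetical wider range, to show how the limit would move.
    print(node_port_capacity("20000-32767"))  # 12768
```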

Currently, users cannot check the status of their control plane. Work is in progress to give them more observability.

Key takeaways

Each cloud provider has its own internal infrastructure and delivers the components of its IaaS offering to customers in its own way. Exoscale leverages its internal Kubernetes-based platform to manage the SKS clusters used by its customers. This Kubernetes-in-Kubernetes approach is constantly evolving to ensure the delivery of a resilient, scalable, and secure solution.

This article is based on the presentation “Kubernetesception, How to run 1000 control planes” given by Remy Rojas, Software Engineer @ Exoscale, at Container Days Conference 2024.